Parsons Puzzle

Parsons problems are available in Codio as Parsons Puzzles. Parsons Puzzles are formative assessments that ask students to arrange blocks of scrambled code, allowing them to focus on the purpose and flow of the code (often including a new pattern or feature) instead of syntax. Codio uses js-parsons for Parsons Puzzles.

Assessment Auto-Generation

Assessments can be auto-generated using the text on the current guide page as context. For more information, see the AI assessment generation page.

Assessment Manual Creation

Complete the following steps to set up a Line Based Grader Parsons Puzzle assessment. The Line Based Grader assessment treats student answers as correct if and only if they match the order and indentation found in Initial Values. For incorrect answers, it highlights the lines that were not ordered or indented properly. For more information on General, Metadata (optional) and Files (optional) see Assessments.
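The line-based rule described above can be pictured with a short Python sketch. This is not Codio's or js-parsons' actual implementation; the helper names `parse_blocks` and `grade_line_based`, and the four-space indent width, are assumptions made for illustration only.

```python
def parse_blocks(code, indent_width=4):
    """Turn solution text into (indent_level, line_text) pairs, skipping blank lines."""
    blocks = []
    for raw in code.splitlines():
        if not raw.strip():
            continue
        stripped = raw.lstrip(" ")
        level = (len(raw) - len(stripped)) // indent_width
        blocks.append((level, stripped.rstrip()))
    return blocks


def grade_line_based(solution, submission):
    """Return (is_correct, positions), where positions are the 0-based indexes of
    submitted lines whose text or indentation differs from the solution."""
    wrong = [
        i for i, (expected, actual) in enumerate(zip(solution, submission))
        if expected != actual
    ]
    # Extra or missing lines also make the answer incorrect.
    shorter = min(len(solution), len(submission))
    longer = max(len(solution), len(submission))
    wrong.extend(range(shorter, longer))
    return (not wrong, wrong)


solution = parse_blocks("""
total = 0
for n in range(3):
    total += n
print(total)
""")

# The student ordered the lines correctly but did not indent the loop body.
submission = [
    (0, "total = 0"),
    (0, "for n in range(3):"),
    (0, "total += n"),
    (0, "print(total)"),
]

print(grade_line_based(solution, solution))    # (True, [])
print(grade_line_based(solution, submission))  # (False, [2])
```

In this sketch the returned positions play the role of the highlighted lines: only the block at index 2 is flagged, because its indentation does not match.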

Complete General.

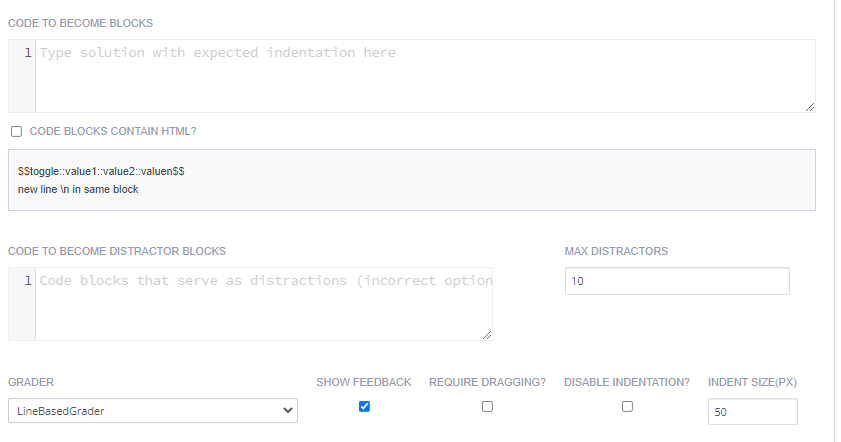

Click Execution in the navigation pane and complete the following information:

| Option | Description |
|---|---|
| Code to Become Blocks | Enter the code solution in the correct order using proper indentation. |
| Code to Become Distractor Blocks | Enter lines of code that serve as distractions. |
| Max Distractors | Enter the maximum number of distractors allowed. |
| Grader | Choose the appropriate grader for the puzzle from the drop-down list. For more information see Grader Options. |
| Show Feedback | Select to show feedback to students and highlight errors in the puzzle. Deselect to hide feedback and not highlight errors in the puzzle. |
| Require Dragging | If you enter Code to Become Distractor Blocks, Require Dragging will automatically turn on. Without distractor blocks, you can decide whether you want students to drag blocks to a separate area to compose their solution. |
| Disable Indentation | If you do not want to require indentation, check the Disable Indentation box. |
| Indent Size | Each indentation defaults to 50 pixels. |

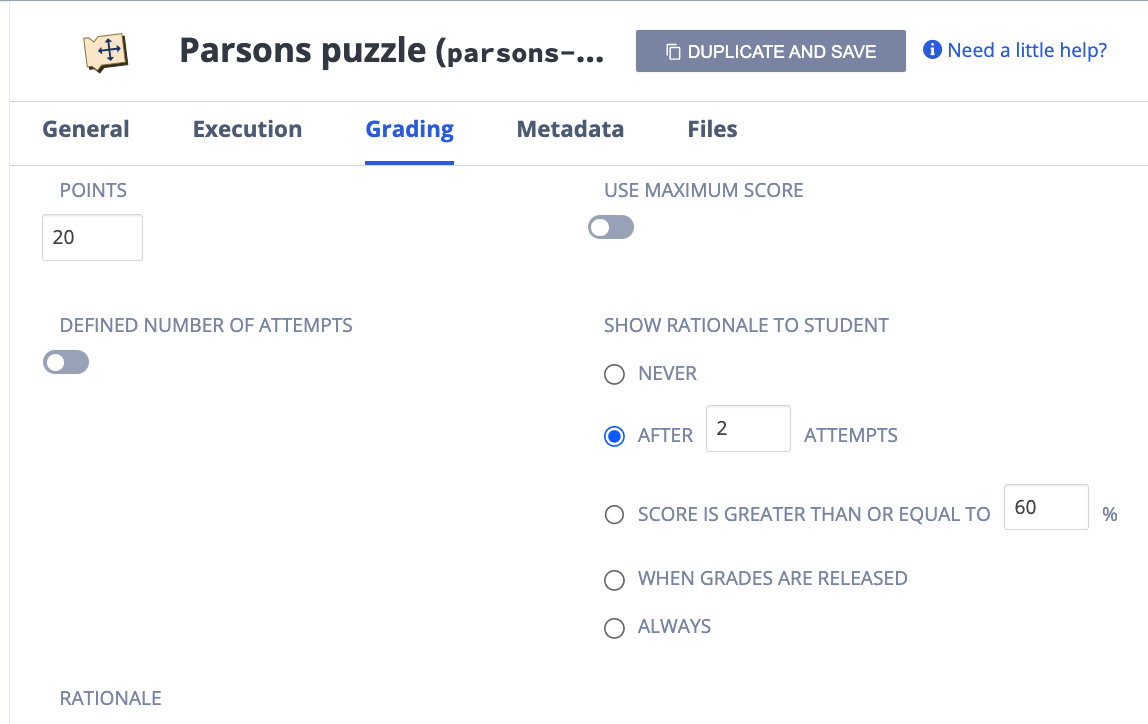
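As an illustration of what might go into the first two fields, the hypothetical Python function below could be entered as Code to Become Blocks, one block per line with its indentation intact. The function name and the distractor lines are invented for this example and do not come from Codio; the comments are annotations for the reader and would not be entered in the fields.

```python
# Code to Become Blocks: the solution, in the correct order with proper indentation.
def find_largest(values):
    largest = values[0]
    for value in values:
        if value > largest:
            largest = value
    return largest

# Possible entries for Code to Become Distractor Blocks (plausible but wrong lines):
#     if value < largest:
#     return value
```

Distractors tend to work best when they differ from a correct line in one meaningful detail, such as a reversed comparison.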

Click Grading in the navigation pane and complete the following fields:

Points - Enter the score awarded when the student submits the correct answer. You can choose any positive numeric value. If this is an ungraded assessment, enter zero (0).

Define Number of Attempts - Enable to allow and set the number of attempts students can make for this assessment. If disabled, the student can make unlimited attempts.

Show Rationale to Students - Toggle to display the answer, and the rationale for the answer, to the student. This guidance information will be shown to students after they have submitted their answer and any time they view the assignment after marking it as completed. You can set when to show this by selecting from Never, After x attempts, If score is greater than or equal to a % of the total, or Always.

Rationale - Enter guidance for the assessment. This is visible to the teacher when the project is opened in the course or when opening the student’s project. This guidance information can also be shown to students after they have submitted their answer and when they reload the assignment after marking it as completed.

Use Maximum Score - Toggle to make the assessment's final score the highest score attained across all runs.

Click Create to complete the process.

Grader Options

VariableCheckGrader

Executes the code in the order submitted by the student and checks variable values afterwards.

Expected and supported options:

vartests (required) - array of variable test objects

Each variable test object can/must have the following properties:

initcode - code that will be prepended before the learner solution code

code - code that will be appended after the learner solution code

message (required) - a textual description of the test, shown to learner

Properties specifying what is tested:

variables - an object with properties for each variable name to be tested; the value of the property is the expected value

or

variable - a variable name to be tested

expected - expected value of the variable after code execution
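To make the shape of these options concrete, here is a minimal Python sketch. The `vartests` list mirrors the property names listed above as a Python dictionary; how Codio expects the options to be entered is not shown here. The `run_vartest` helper is hypothetical and only imitates the described behavior (run initcode, then the student's lines, then code, then compare variable values).

```python
# Hypothetical test definitions using the property names listed above.
vartests = [
    {
        "initcode": "a = 3\nb = 4",
        "code": "",
        "message": "Testing with a = 3 and b = 4",
        "variables": {"total": 7},
    },
    {
        "initcode": "a = 10\nb = -2",
        "code": "",
        "message": "Testing with a = 10 and b = -2",
        "variable": "total",
        "expected": 8,
    },
]


def run_vartest(test, student_code):
    """Run initcode, the student's code, then code, and compare variable values."""
    program = "\n".join([test.get("initcode", ""), student_code, test.get("code", "")])
    namespace = {}
    exec(program, namespace)  # acceptable in a local sketch; do not exec untrusted code
    if "variables" in test:
        expected = test["variables"]
    else:
        expected = {test["variable"]: test["expected"]}
    return all(namespace.get(name) == value for name, value in expected.items())


# The lines a student might assemble from the puzzle blocks.
student_code = "total = a + b"

results = [(test["message"], run_vartest(test, student_code)) for test in vartests]
for message, passed in results:
    print(message, "->", "passed" if passed else "failed")
```

The first test uses the `variables` form and the second uses the `variable` plus `expected` form; both describe the same kind of check.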

UnitTestGrader

Executes student code and Skulpt unit tests. This grader is for Python problems where students create functions. Similar to traditional unit tests on code, this grader leverages a unit test framework where you set asserts - meaning this grader checks the functionality of student code.
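The idea of checking functionality with asserts can be sketched using Python's standard `unittest` module. This is a stand-in: the exact test module and boilerplate that the Skulpt-based grader expects may differ, and the `add` function and test names are hypothetical.

```python
import unittest


# A function as a student might assemble it from the puzzle blocks.
def add(a, b):
    return a + b


class AddTests(unittest.TestCase):
    def test_positive_numbers(self):
        self.assertEqual(add(2, 3), 5, "add(2, 3) should be 5")

    def test_negative_number(self):
        self.assertEqual(add(-1, 1), 0, "add(-1, 1) should be 0")


# Load and run the tests programmatically so the outcome can be inspected.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(AddTests)
result = unittest.TextTestRunner(verbosity=0).run(suite)
print("all tests passed:", result.wasSuccessful())
```

Because the tests call the function rather than inspect the order of lines, any arrangement of blocks that produces a working function passes.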

LanguageTranslationGrader

A code-translating grader in which Java or pseudocode blocks map to Python in the background. Selecting the language allows the Parsons problem to check for correct indentation and syntax.

TurtleGrader

This is for exercises that draw turtle graphics in Python. Grading is based on comparing the commands executed by the model and student turtle. If the executable_code option is also specified, the code on each line of that option will be executed instead of the code in the student constructed lines.

Note

Student code should use the variable myTurtle for commands to control the turtle in order for the grading to work.

turtleModelCode - The code constructing the model drawing. The turtle is initialized to modelTurtle variable, so your code should use that variable.

The following options are available:

turtlePenDown - A boolean specifying whether or not the pen should be put down initially for the student constructed code.

turtleModelCanvas - ID of the canvas DOM element where the model solution will be drawn. Defaults to modelCanvas.

turtleStudentCanvas - ID of the canvas DOM element where student turtle will draw. Defaults to studentCanvas.
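Command-based comparison can be illustrated with a small Python sketch. The `RecordingTurtle` class and the `run` helper are hypothetical stand-ins that only log commands; they are not the grader's real turtle and draw nothing. Only the variable names modelTurtle and myTurtle come from the description above.

```python
class RecordingTurtle:
    """Hypothetical stand-in for a turtle that only logs the commands it receives."""

    def __init__(self):
        self.commands = []

    def forward(self, distance):
        self.commands.append(("forward", distance))

    def left(self, angle):
        self.commands.append(("left", angle))

    def right(self, angle):
        self.commands.append(("right", angle))


def run(code, variable_name):
    """Execute turtle code against a fresh recorder bound to variable_name."""
    recorder = RecordingTurtle()
    exec(code, {variable_name: recorder})
    return recorder.commands


# What might be supplied as turtleModelCode: it drives modelTurtle.
model_code = """
for _ in range(4):
    modelTurtle.forward(50)
    modelTurtle.right(90)
"""

# Student-constructed code must drive myTurtle.
student_code = """
for _ in range(4):
    myTurtle.forward(50)
    myTurtle.right(90)
"""

# Same shape of program, but turning the wrong way.
wrong_code = """
for _ in range(4):
    myTurtle.forward(50)
    myTurtle.left(90)
"""

model_commands = run(model_code, "modelTurtle")
print(run(student_code, "myTurtle") == model_commands)  # True
print(run(wrong_code, "myTurtle") == model_commands)    # False
```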

Sample Starter Pack

There is a Starter Pack project, Demo Guides and Assessments, that you can add to your account and that includes examples of Parsons Puzzle assessments. If it is not already loaded in your account (in your My Projects area), go to Starter Packs and search for Demo Guides and Assessments.